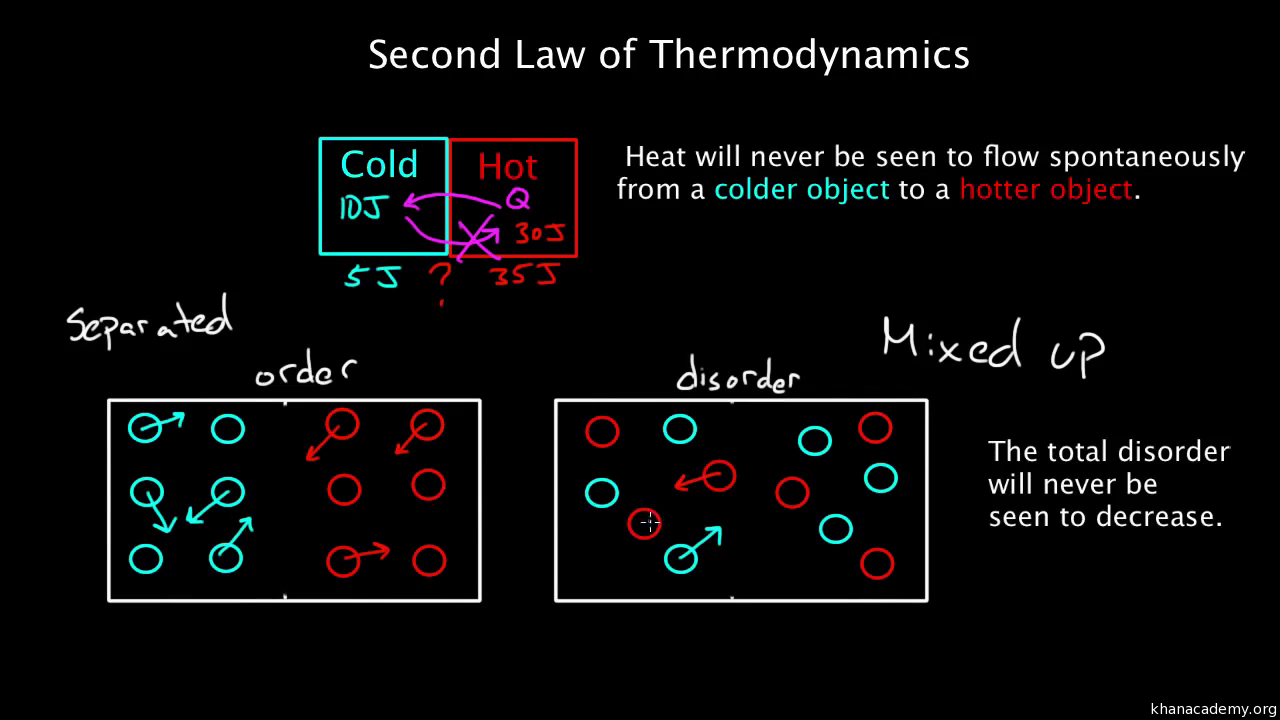

Scientists have a mathematical way of measuring randomness. It is called ‘entropy’ and is related to the number of arrangements of particles (such as molecules) that lead to a particular state. (By a ‘state’, we mean an observable situation, such as a particular number of particles in each of two boxes.)

As the number of particles increases, the number of possible arrangements in any particular state increases astronomically, so we need a scaling factor to produce numbers that are easy to work with. Entropy, S, is given by the expression

$$S = k \ln W$$

where W is the number of ways of arranging the particles that gives rise to a particular observed state of the system, and k is a constant called Boltzmann’s constant, which has the value 1.38 × 10⁻²³ J K⁻¹. In the expression above, k has the effect of scaling the vast number W to a smaller, more manageable number. The natural logarithm, ln, also has the effect of scaling a vast number to a small one: the natural log of 10²³ is about 52.96, for example.

Observe that not all solids have smaller entropy values than all liquids, nor do all liquids have smaller values than all gases. As a general trend, however:

S solids < S liquids < S gases

The second law of thermodynamics

Since processes take place by chance alone, and this leads to increasing randomness, we can say that in any spontaneous process (one that takes place of its own accord and is not driven by outside influences) entropy increases. This is called the second law of thermodynamics, and is probably the most fundamental physical law. So processes that involve spreading out and increasing disorder are favoured over those where things become more ordered. Students may have noticed this in their bedrooms!

The word ‘feasible’ is also used to mean the same as ‘spontaneous’. The terms have nothing to do with the rate of a process, so a reaction may be feasible (spontaneous) but occur so slowly that in practice it does not occur at all. For instance, the reaction of diamond (pure carbon) with oxygen to form carbon dioxide is feasible (spontaneous), but we do not have to worry about jewellery burning in air at room temperature: ‘diamonds are forever’!

Illustrating the second law

You would have no difficulty in deciding which one is being played in reverse: randomly arranged fragments do not spontaneously arrange themselves into an ordered configuration.

Hydrogen chloride and ammonia gases diffuse along a tube and react to produce a ring of solid ammonium chloride. The two gases forming a solid clearly involve a decrease in entropy, yet the reaction occurs spontaneously. In fact, we can calculate the numerical value of the entropy change from the figures in the table above (see Introducing entropy):

Total entropy of starting materials: 187 + 192 = 379 J K⁻¹ mol⁻¹

As expected, the change is a significant decrease.
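The entropy bookkeeping for the ammonium chloride reaction can be sketched in a few lines of Python. The values 187 and 192 J K⁻¹ mol⁻¹ are the ones quoted in the text; assigning them to hydrogen chloride and ammonia respectively follows typical literature values. The entropy of solid ammonium chloride is not given in this section, so the figure of 95 J K⁻¹ mol⁻¹ used here is an assumed, approximate literature value, and the function name `entropy_change` is purely illustrative.

```python
# Standard molar entropies in J K^-1 mol^-1.
S_HCL_GAS = 187      # quoted in the text
S_NH3_GAS = 192      # quoted in the text
S_NH4CL_SOLID = 95   # ASSUMPTION: approximate literature value, not given in this section


def entropy_change(reactants, products):
    """Return the system entropy change: sum(S products) - sum(S reactants)."""
    return sum(products) - sum(reactants)


total_start = S_HCL_GAS + S_NH3_GAS
delta_s = entropy_change([S_HCL_GAS, S_NH3_GAS], [S_NH4CL_SOLID])

print(f"Total entropy of starting materials: {total_start} J K^-1 mol^-1")
print(f"Entropy change of the system: {delta_s} J K^-1 mol^-1")
```

Under that assumption the system's entropy falls by a few hundred J K⁻¹ mol⁻¹, which matches the "significant decrease" the text describes for two gases forming a solid.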

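As a rough illustration of the Boltzmann relation S = k ln W discussed earlier, the sketch below counts arrangements for the two-box picture of a ‘state’. It assumes distinguishable particles, so that the number of ways W of putting a given number of particles in the left-hand box is a binomial coefficient; that counting model and the function names are assumptions made for the example, not something the tutorial specifies.

```python
import math

K_B = 1.38e-23  # Boltzmann's constant in J K^-1, the value quoted in the text


def arrangements(n_total, n_left):
    """Ways of choosing which n_left of n_total distinguishable particles
    sit in the left-hand box (ASSUMED counting model)."""
    return math.comb(n_total, n_left)


def boltzmann_entropy(w):
    """Entropy S = k ln W for a state with W arrangements."""
    return K_B * math.log(w)


# The natural logarithm scales a vast number down to a small one.
print(math.log(10**23))

# An even split has far more arrangements than an all-in-one-box state,
# and therefore a higher entropy.
for n_left in (0, 25, 50):
    w = arrangements(100, n_left)
    print(n_left, w, boltzmann_entropy(w))
```

With 100 particles, the state with every particle in one box has a single arrangement and so zero entropy, while the even split has the largest W and the largest entropy.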